I am a Research Scientist at Amazon, where I work on machine learning and optimization algorithms that operate at scale. Previously, I was an undergraduate student at BITS Pilani Goa, where I studied Electronics and (unofficially) specialized in Artificial Intelligence. While at BITS, I was also President of the Society for Artificial Intelligence and Deep Learning and a member of the APP Centre for AI Research, where I regularly collaborated on projects, assisted in courses, and helped incubate AI research.


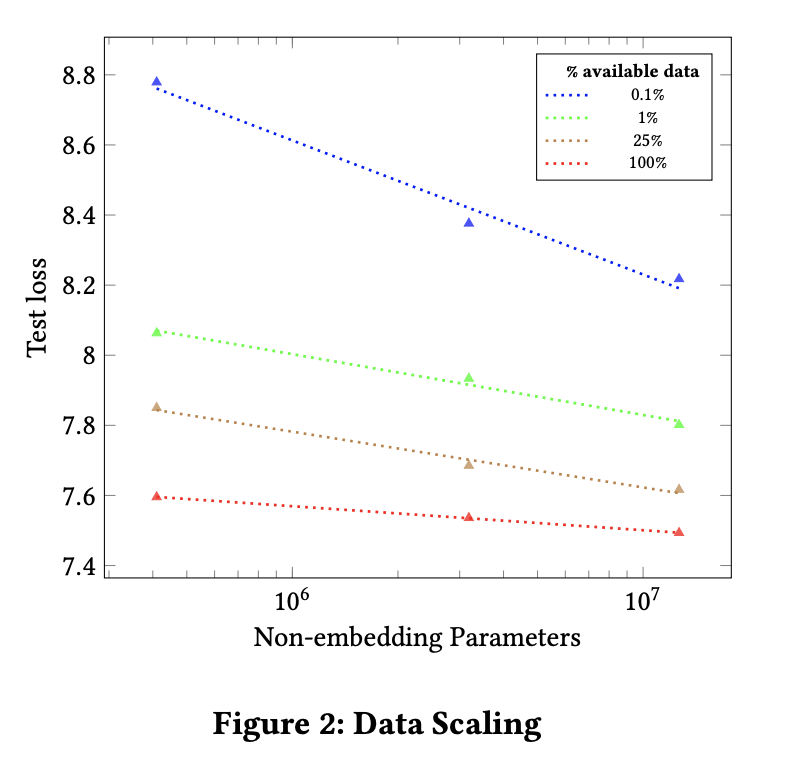

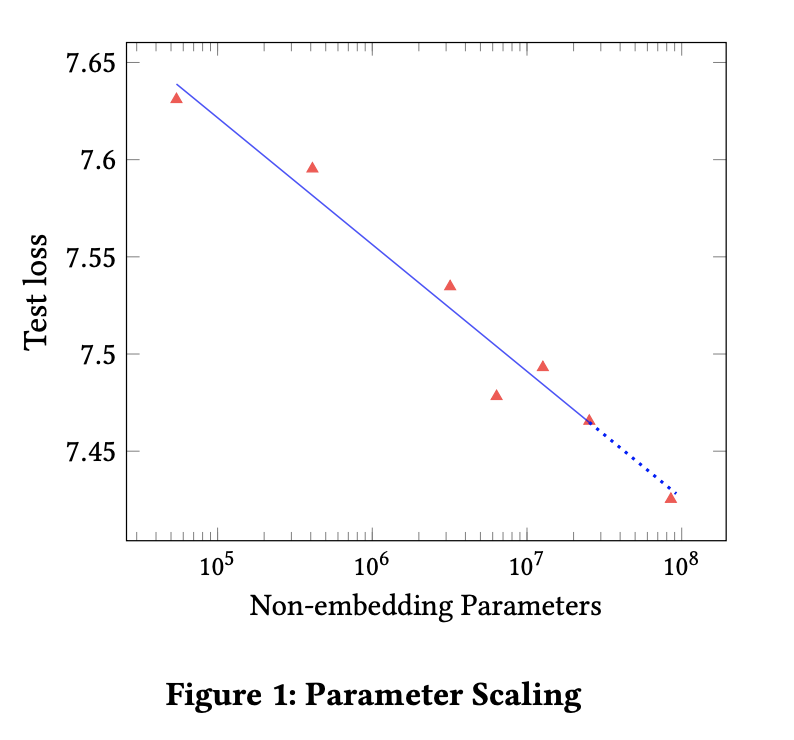

17th AdKDD (KDD 2023 Workshop on Artificial Intelligence for Computational Advertising). Sharad Chitlangia, Krishna Reddy Kesari, Rajat Agarwal. We demonstrate scaling properties across model size, data, and compute on user activity sequence data. We also show that larger models perform better on critical, business-relevant downstream tasks such as response prediction and bot detection. PDF · Talk · BibTeX

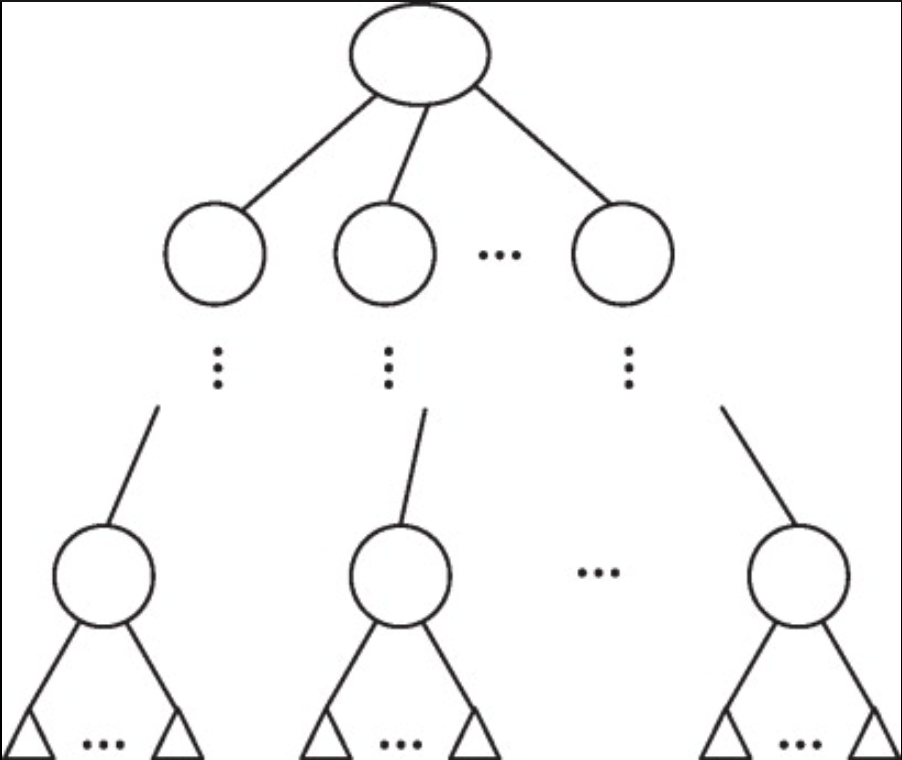

KDD 2023 Workshop on Artificial Intelligence-Enabled Cybersecurity Analytics. Rajat Agarwal, Sharad Chitlangia, Anand Muralidhar, Adithya Niranjan, Abheesht Sharma, Koustav Sadhukan, Suraj Sheth. We introduce a three-tiered framework for learning and generating explainable network request signatures that explain black-box robot detection decisions. PDF · BibTeX

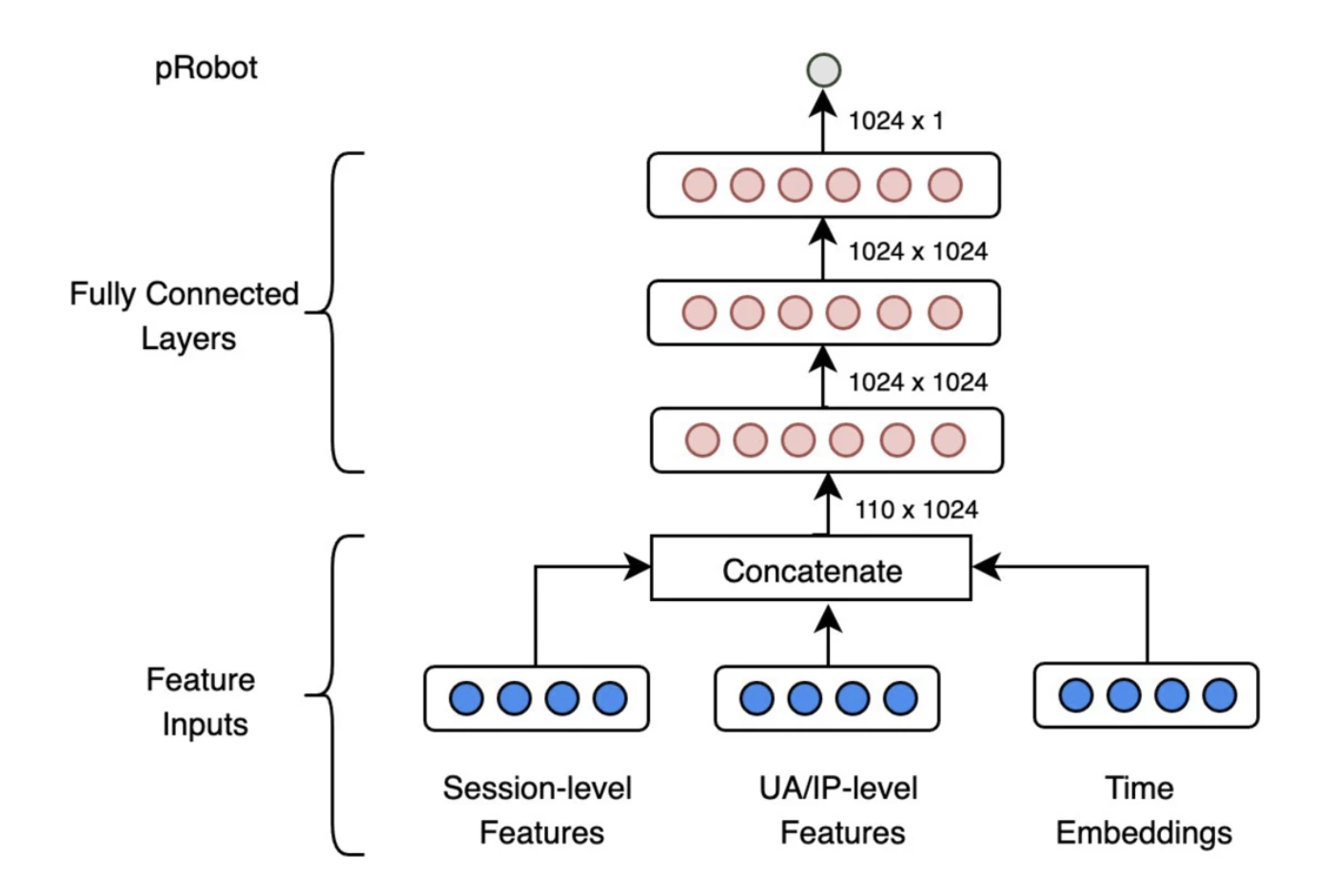

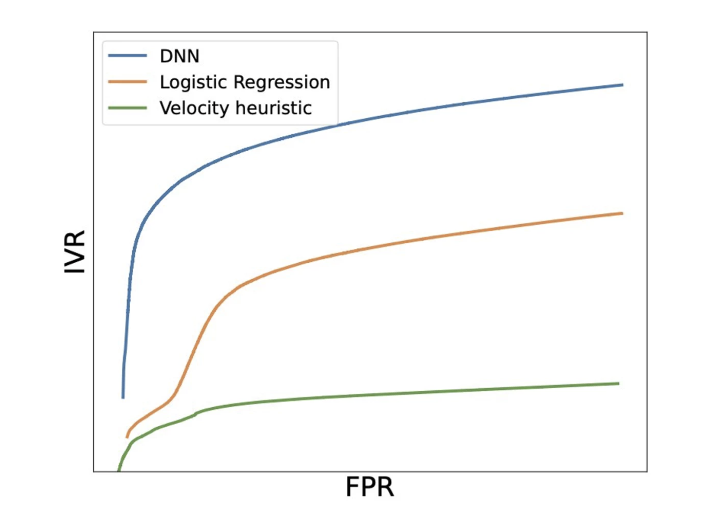

Innovative Applications of Artificial Intelligence, AAAI 2023. Anand Muralidhar, Sharad Chitlangia, Rajat Agarwal, Muneeb Ahmed. We introduce an approach to detecting bot traffic that protects advertisers from online fraud in real time. We also introduce an optimization framework for achieving optimal performance across various business slices. We discuss several engineering aspects, including the offline retraining methodology, real-time inference infrastructure, and disaster recovery mechanisms. PDF · Talk · Blog · BibTeX

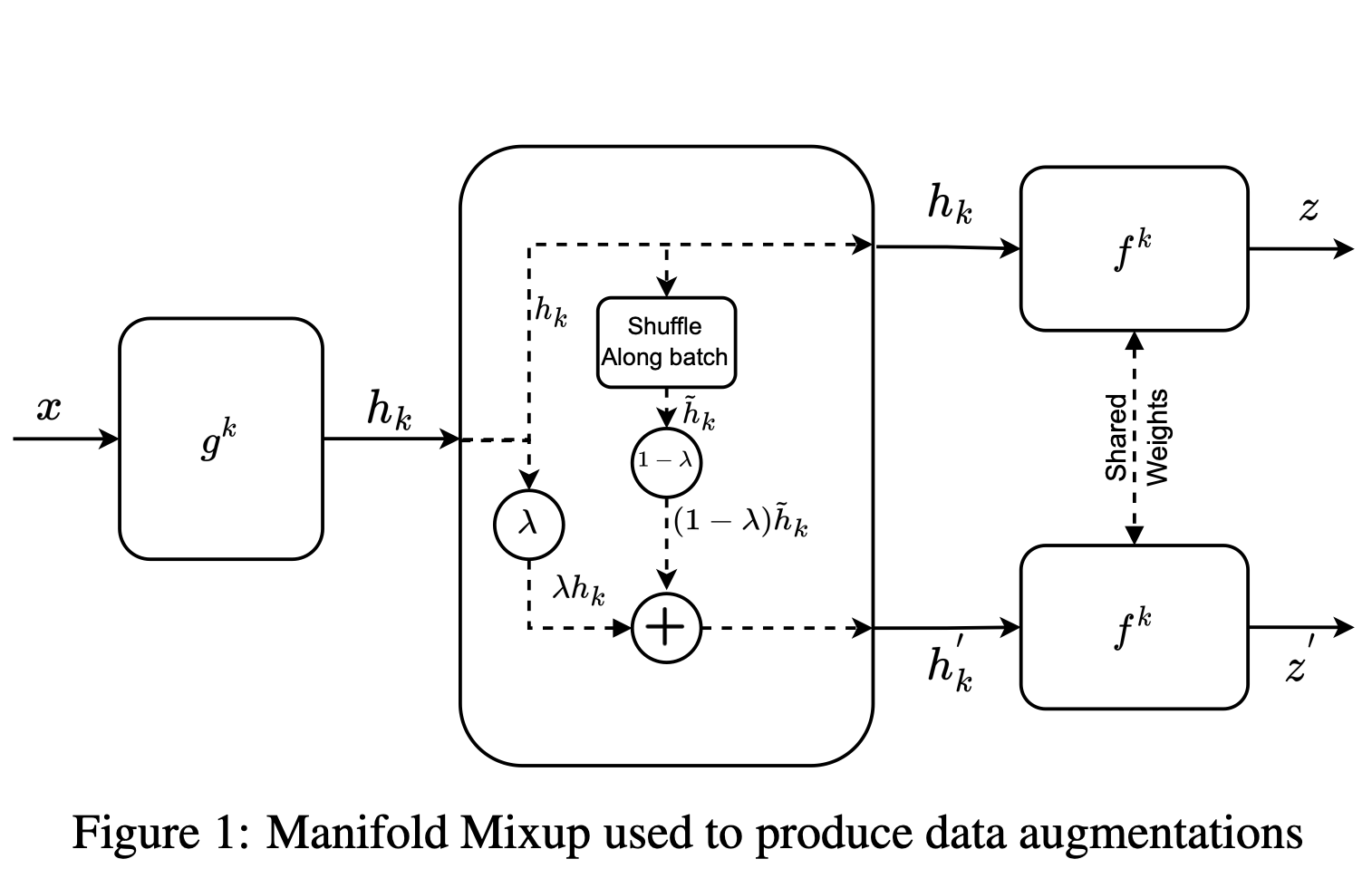

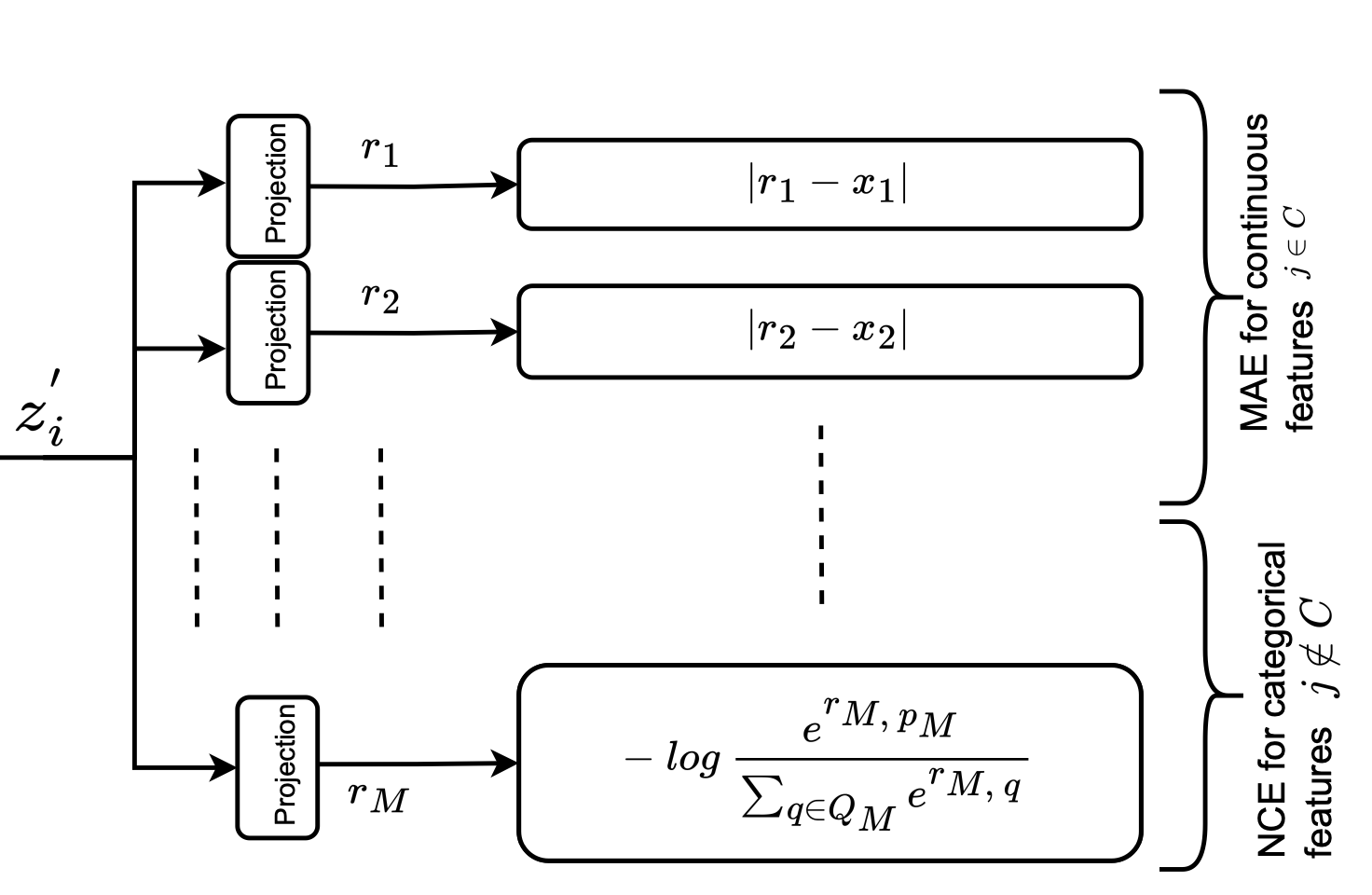

NeurIPS 2022 Workshop on Table Representation Learning. Sharad Chitlangia, Anand Muralidhar, Rajat Agarwal. We introduce a method for self-supervised learning on large-scale tabular data and show its efficacy for large-scale bot detection, where the data consists of very high-cardinality categorical features and continuous features with large ranges. PDF · BibTeX

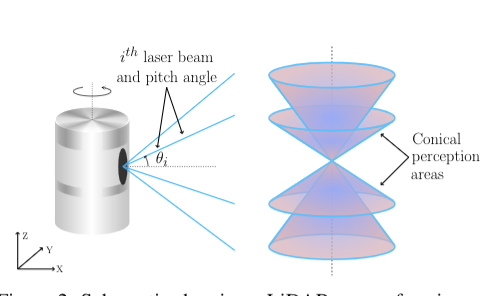

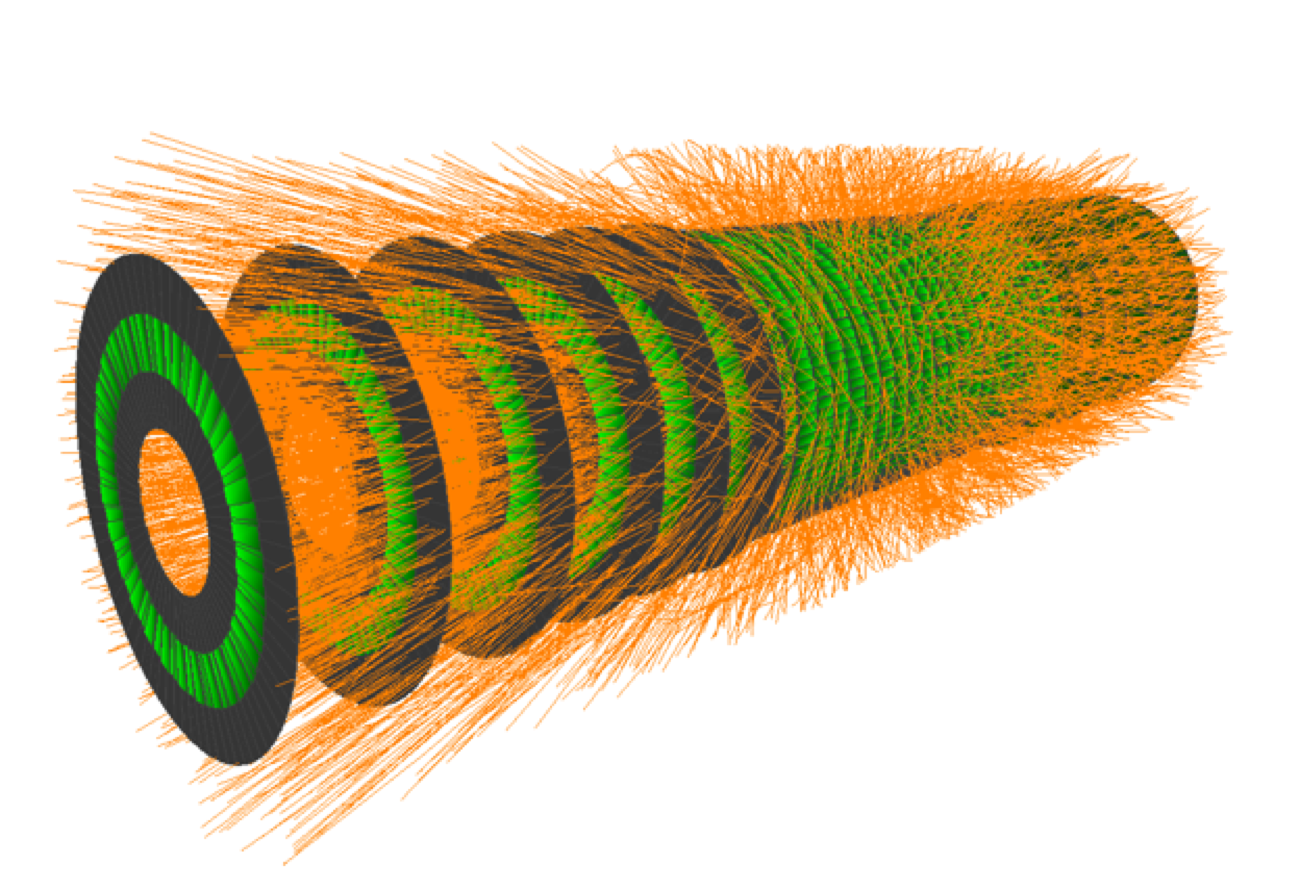

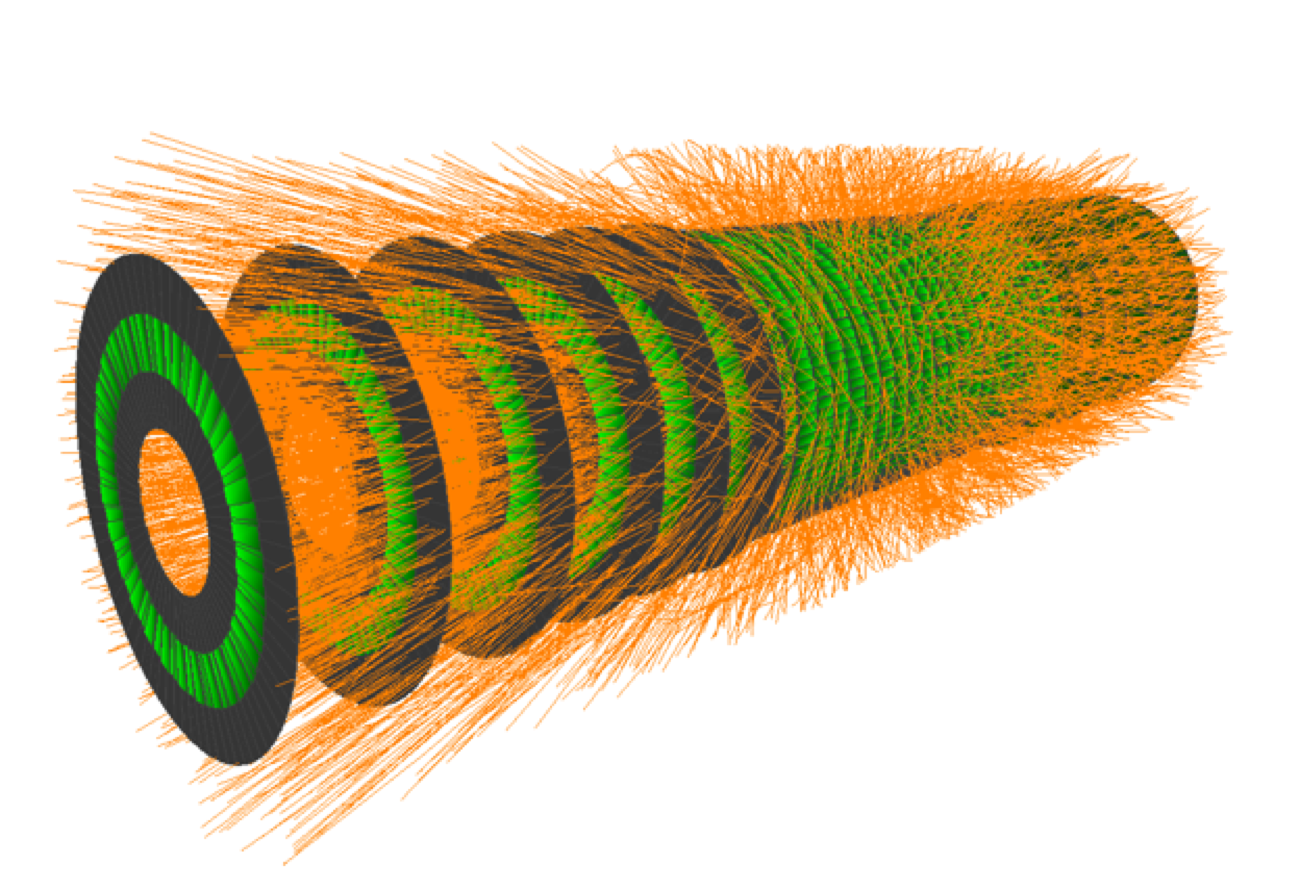

CVPR 2022 and Autonomous Driving: Perception, Prediction and Planning Workshop, CVPR 2021. Hanjiang Hu*, Zuxin Liu*, Sharad Chitlangia, Akhil Agnihotri, Ding Zhao. We propose a surrogate cost function to optimize the placement of LiDAR sensors so as to improve 3D object detection performance. We validate our approach by building a data collection framework in a realistic open-source autonomous vehicle simulator. PDF · Talk · BibTeX

NeurIPS 2021. Mark Mazumder, Sharad Chitlangia, Colby Banbury, Yiping Kang, Juan Manuel Ciro, Keith Achorn, Daniel Galvez, Mark Sabini, Peter Mattson, David Kanter, Greg Diamos, Pete Warden, Josh Meyer, Vijay Janapa Reddi. The Multilingual Spoken Words Corpus is a speech dataset of over 340,000 spoken words in 50 languages, with over 23.7 million examples. OpenReview · PDF · Dataset · Code

Elsevier Neural Networks. Ajay Subramanian, Sharad Chitlangia, Veeky Baths. We review findings from the neuroscience and psychology literature that provide evidence for key elements of the RL problem, and describe how these elements are represented in regions of the brain. PDF · BibTeX

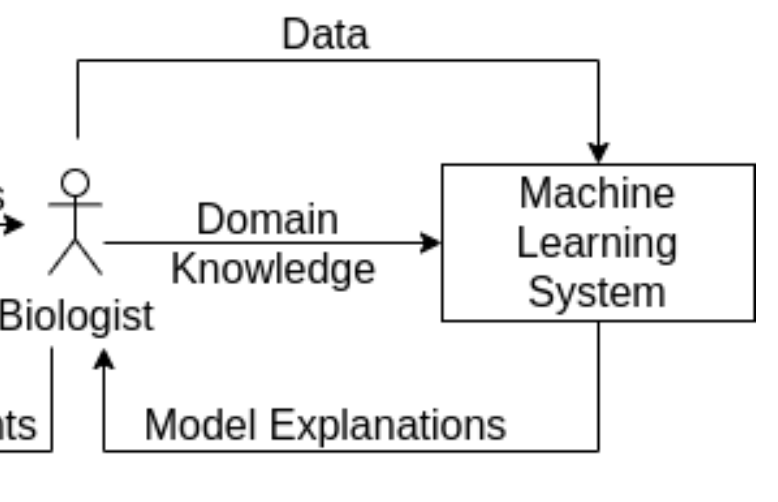

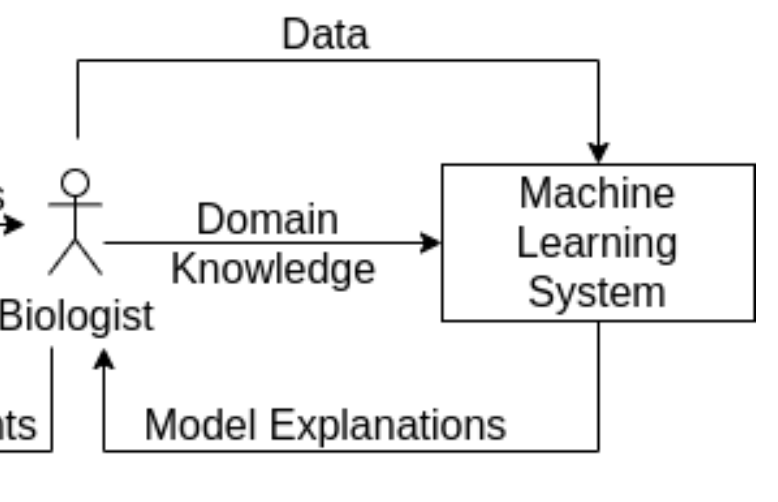

Nature, Scientific Reports. Tirtharaj Dash, Sharad Chitlangia, Aditya Ahuja, Ashwin Srinivasan. To advance AI for Science, it is important to be able to tell machine learning models, in a concise form, what we already know about a particular problem domain. We survey current techniques for incorporating domain knowledge into neural networks, list the major open problems, and explain why incorporating domain knowledge can help from the perspectives of explainability, ethics, and more. PDF · BibTeX

Harvard Data Science Review. Vijay Janapa Reddi, Brian Plancher, Susan Kennedy, Laurence Moroney, Pete Warden, Anant Agarwal, Colby Banbury, Massimo Banzi, Matthew Bennett, Benjamin Brown, Sharad Chitlangia, Radhika Ghosal, Sarah Grafman, Rupert Jaeger, Srivatsan Krishnan, Maximilian Lam, Daniel Leiker, Cara Mann, Mark Mazumder, Dominic Pajak, Dhilan Ramaprasad, J. Evan Smith, Matthew Stewart, Dustin Tingley. What went into building a massive tinyML community of leading academic and industry practitioners working at the intersection of machine learning and systems? A white paper on the tinyML edX Professional Certificate course and the much broader tinyMLx community, which drew over 35,000 learners from around the world in less than six months. PDF · Website · BibTeX

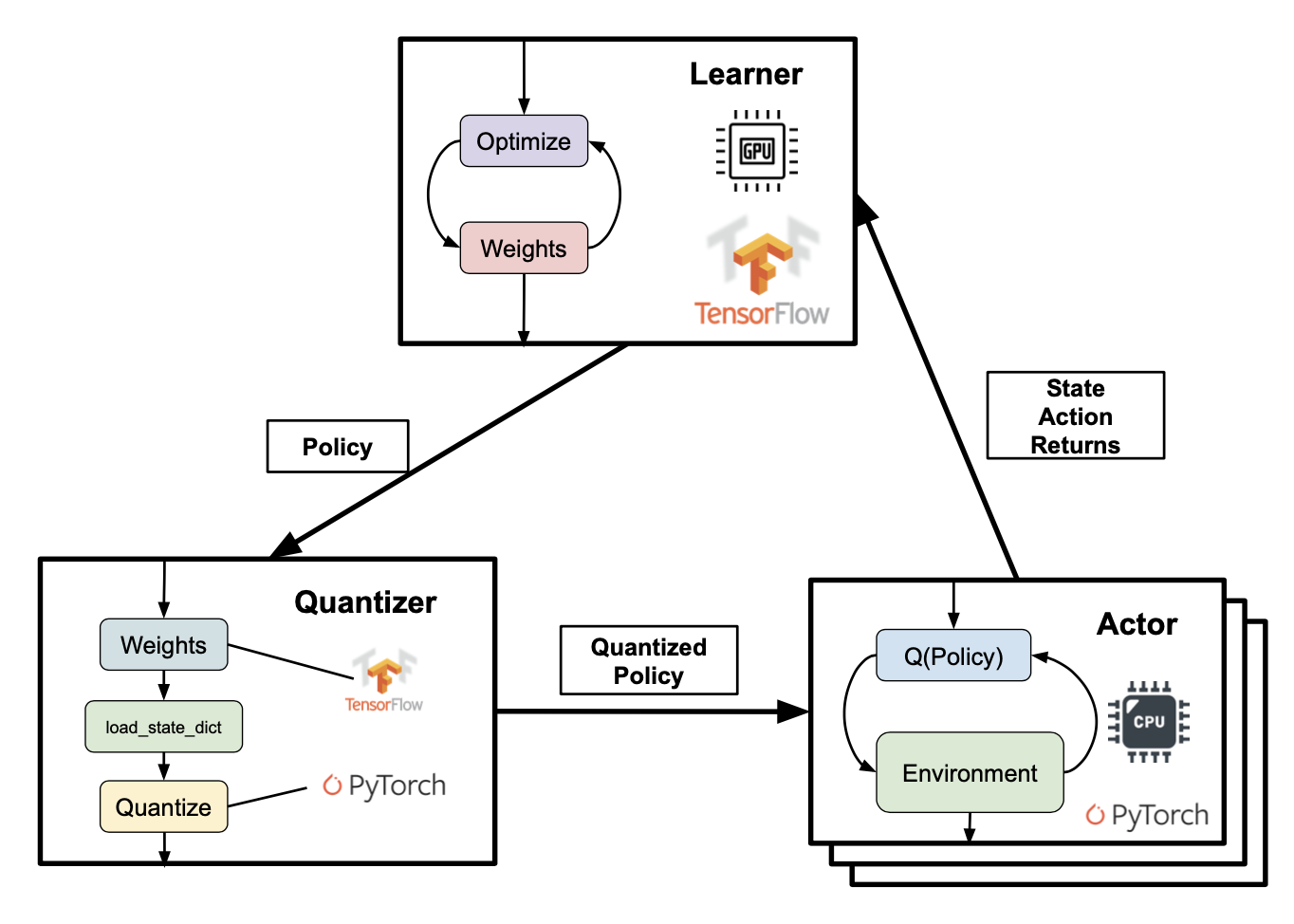

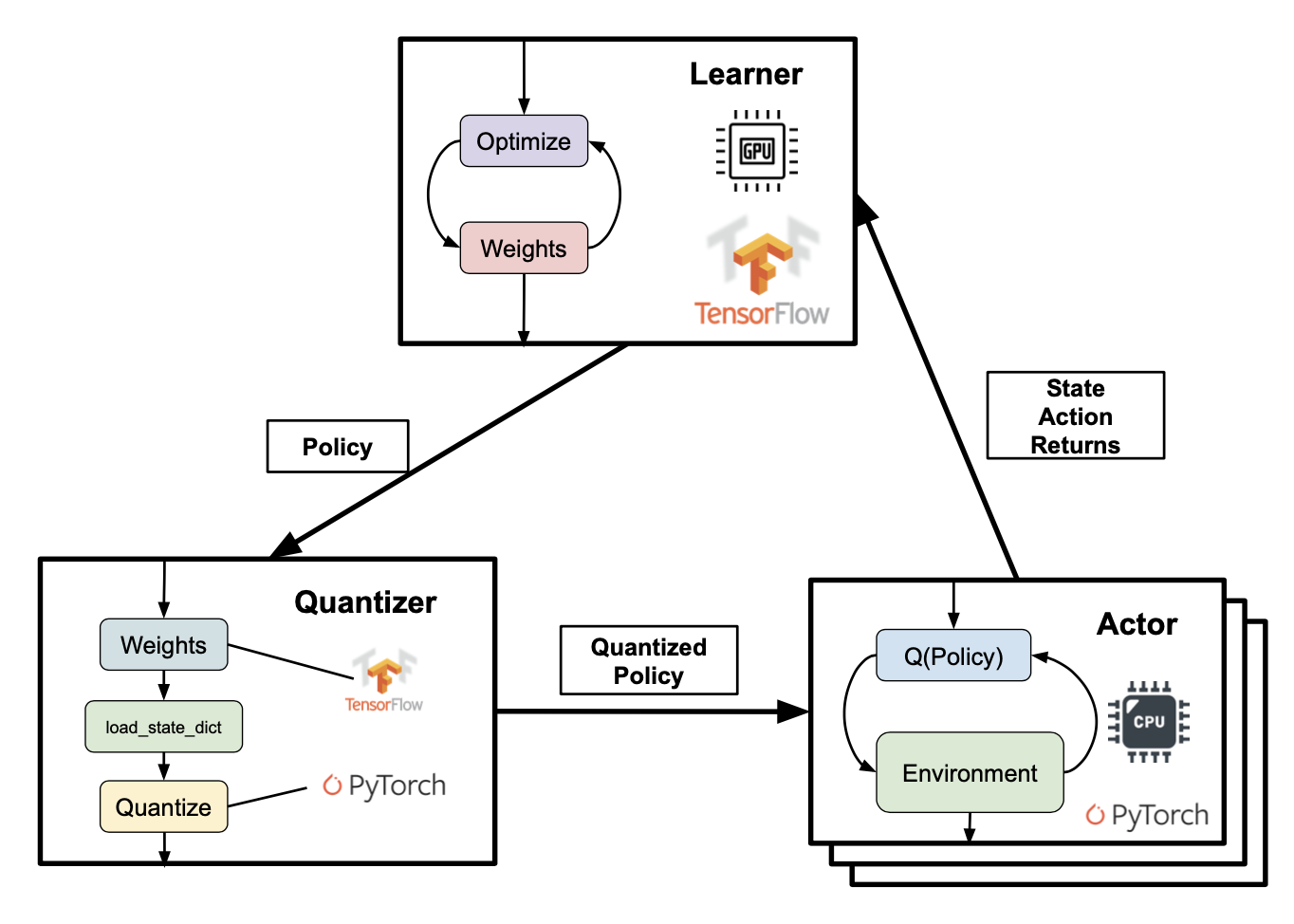

Hardware Aware Efficient Training Workshop at ICLR 2021. Maximilian Lam, Sharad Chitlangia, Srivatsan Krishnan, Zishen Wan, Gabriel Barth-Maron, Aleksandra Faust, Vijay Janapa Reddi. Speeding up reinforcement learning training is not straightforward because it relies on continuous interaction with the environment. We demonstrate that by running parallel actors at lower precision and the learner at full precision, training can be sped up by 1.5-2.5x without any loss in performance (and at times with better performance, due to the noise induced by quantization)! PDF · Poster · Code · BibTeX

MLSys ReCoML Workshop, 2020. Srivatsan Krishnan, Sharad Chitlangia, Maximilian Lam, Zishen Wan, Aleksandra Faust, Vijay Janapa Reddi. Does quantization work for reinforcement learning? We discuss the benefits of applying quantization to RL. Motivated by results on post-training quantization (PTQ), we introduce an algorithm, ActorQ, and show how quantization can be used in the distributed actor-learner setting to achieve speedups of up to 5x! arXiv · Code · BibTeX · Poster

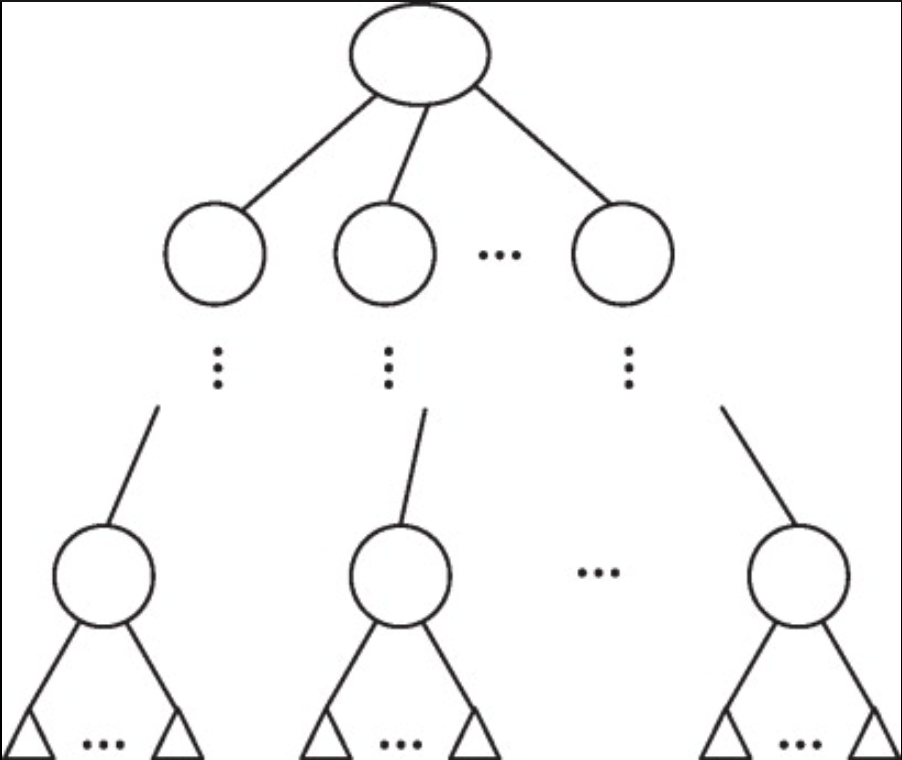

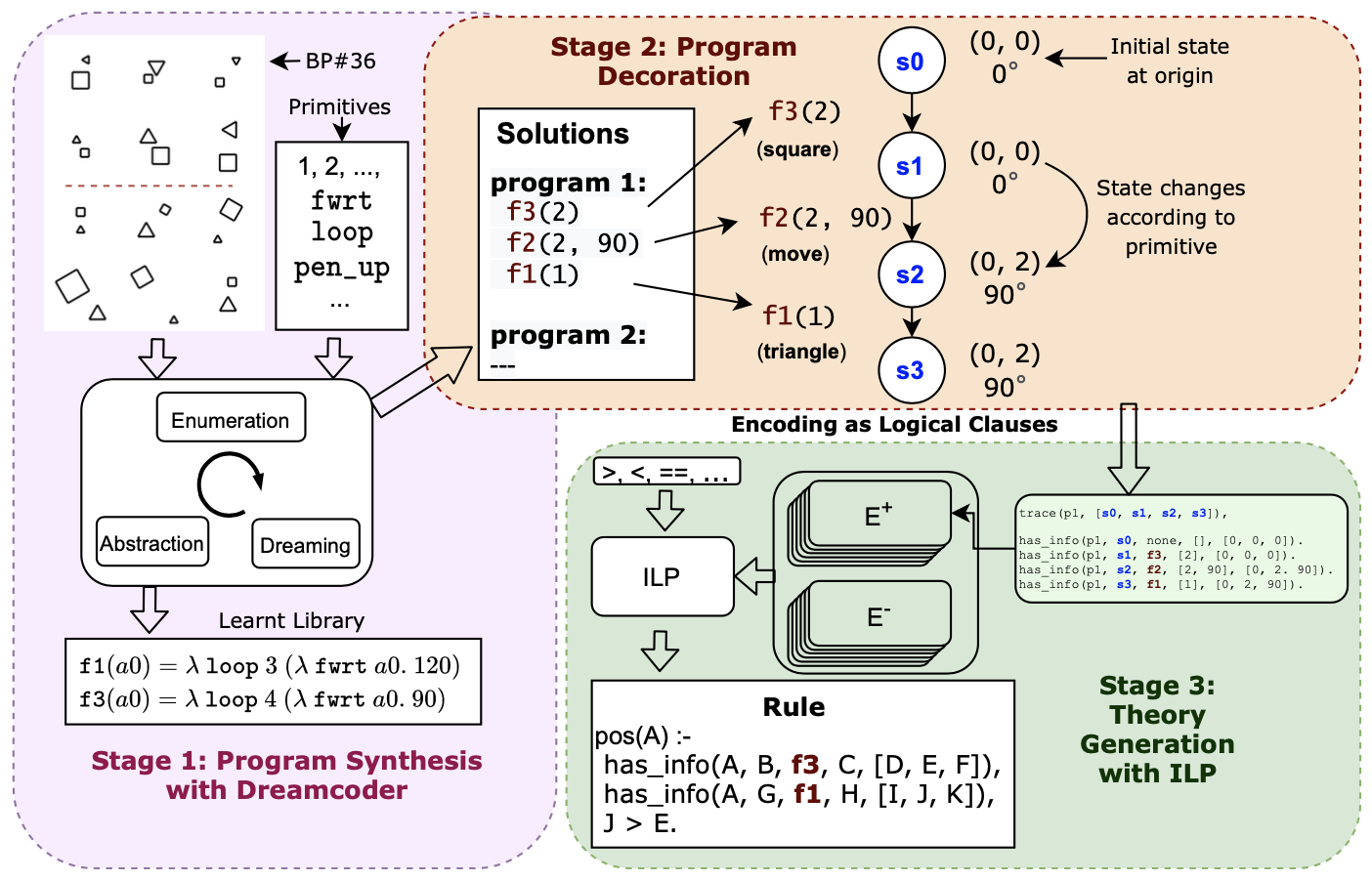

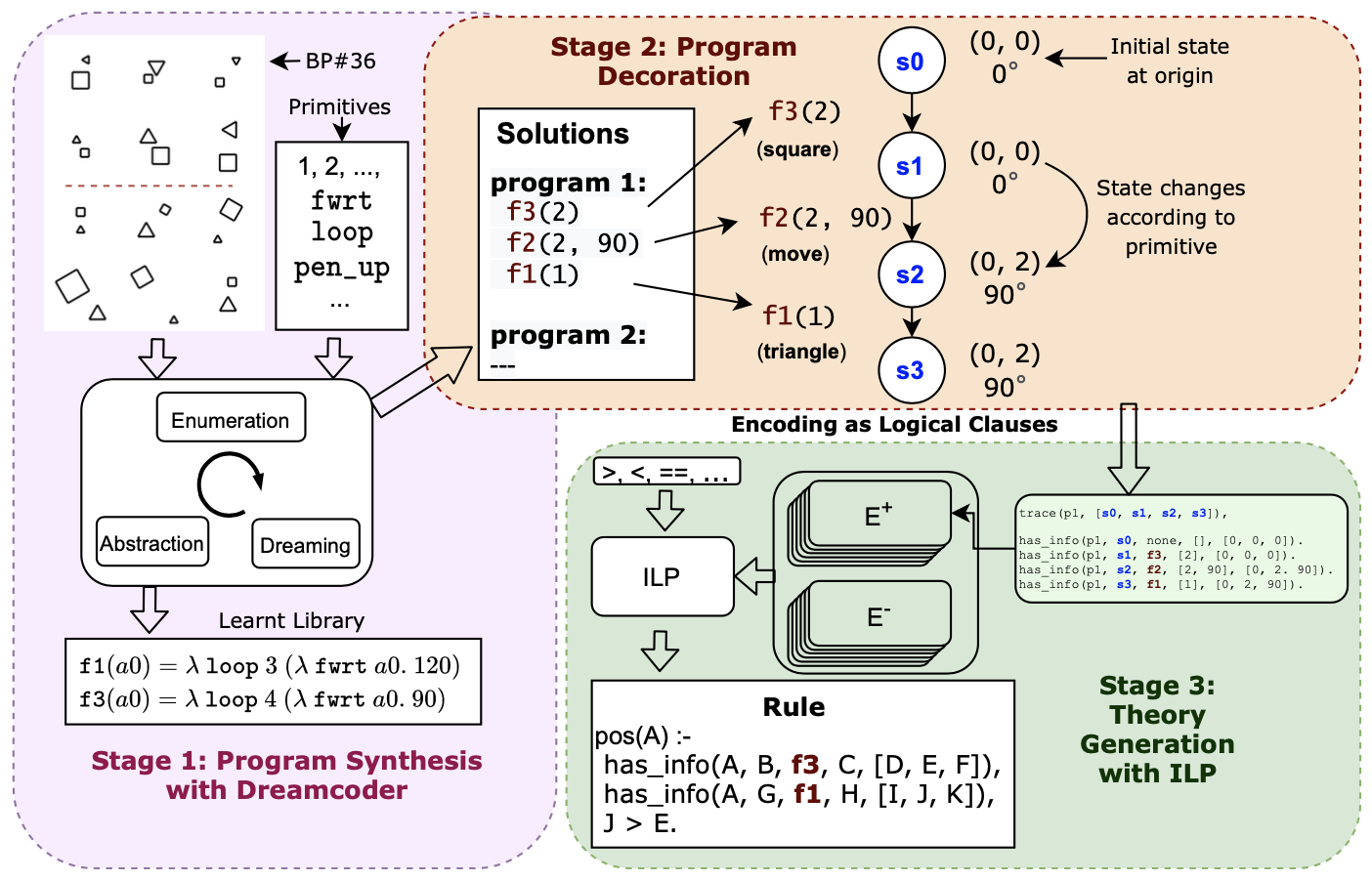

10th International Workshop on Approaches and Applications of Inductive Programming. Sharad Chitlangia, Atharv Sonwane, Tirtharaj Dash, Lovekesh Vig, Gautam Shroff, Ashwin Srinivasan. We use graphical program synthesis to synthesize programs that represent Bongard problems and serve as generative representations of them. We show that these programs are good representations for learning interpretable discriminative theories with Inductive Logic Programming to solve Bongard problems. arXiv · Poster · BibTeX

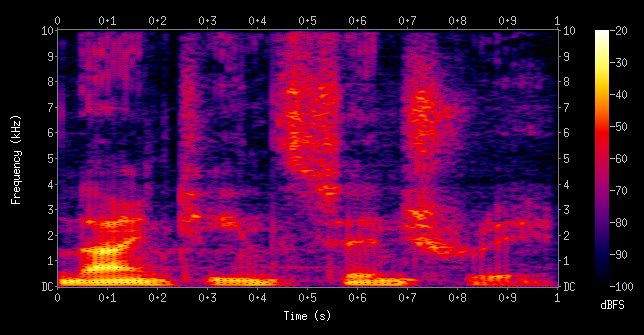

Data Engineering for Zero/Few-Shot Multilingual Keyword Spotting

Inductive Programming and Its Applications to Bongard Problems

Worked on optimal LiDAR placement.

Worked on query disambiguation and intent mining from search queries to improve catalog quality.

Vowpal Wabbit is known for its ability to solve complex machine learning problems extremely fast. Through this project, we aim to push this ability even further by introducing FlatBuffers, an efficient cross-platform serialization library known for its memory-access efficiency and speed. We develop FlatBuffers schemas for input examples so that they can be stored as binary buffers, and show a performance increase of up to 30% compared to traditional formats.

Working on the use of online Bayesian reinforcement learning for meta-learning and automatic text simplification.

Worked on interpretable and explainable AI for Deep Relational Machines using causal machine learning. Showed that features with high causal attribution preserve learning, and that when these features are traced back to learned rules, the rules cover more examples than those derived from other features. Part of the TCS-Datalab.

Worked at the intersection of deep reinforcement learning and energy efficiency for drones, making extensive use of TensorFlow and TFLite. Performed more than 350 experiments showing the effects of quantization in RL, including that quantization during training acts as a better regularizer than traditional techniques and thus enables greater exploration and generalization.

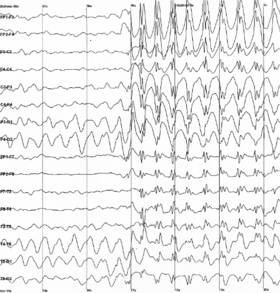

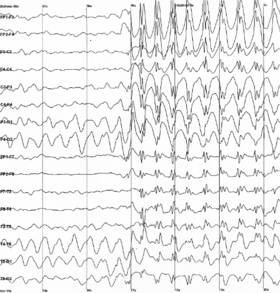

Particle track reconstruction using machine learning. Ported top solutions from the TrackML challenge to the ACTS framework. Added an end-to-end example of running a PyTorch model in ACTS through PyTorch's C++ frontend, libtorch, to enable rapid testing of models, and made it thread safe to allow massively parallel processing. Also did some testing with GNNs. In the final product, it was possible to run a simulation producing more than 10,000 particles and 100K trajectories and perform reconstruction with over 93% accuracy in less than 10 seconds.

Revamped the existing information retrieval system to focus more on distributional semantics. Developed embeddings from ELMo, a deep learning based model that captures semantic, syntactic, and contextual information. Trained and deployed stance detection models (ESIM).

I've worked in various subfields of AI, ranging from computer vision to speech synthesis and from particle physics to reinforcement learning. Please take a look at my projects below to learn more.

A PyTorch reinforcement learning library centered around reproducible and generalizable algorithm implementations. Code

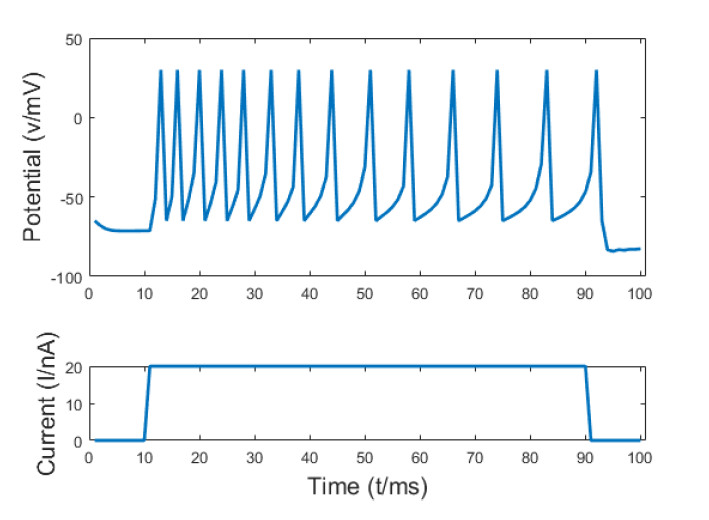

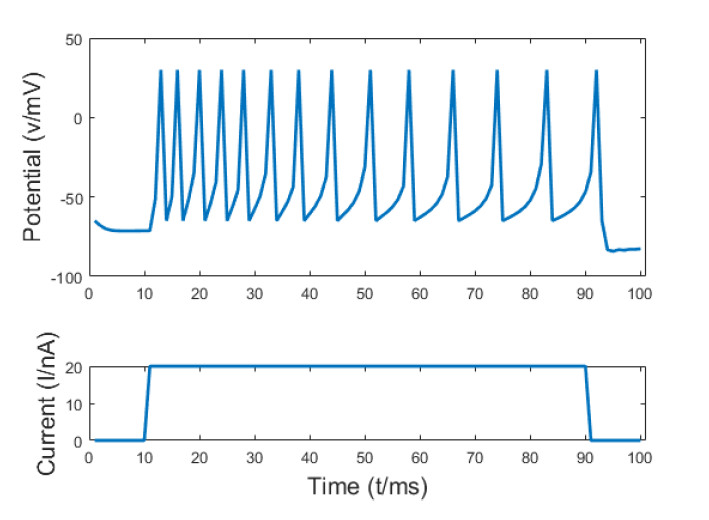

With the third generation of neural networks, Spiking Neural Networks, on the rise, we explore the real-time interfacing capabilities of conductance-based neurons in an up-and-coming software tool, SpineCreator. Report

Implementation of the paper Neural Voice Cloning with Few Samples by Baidu Research. Audio · Code

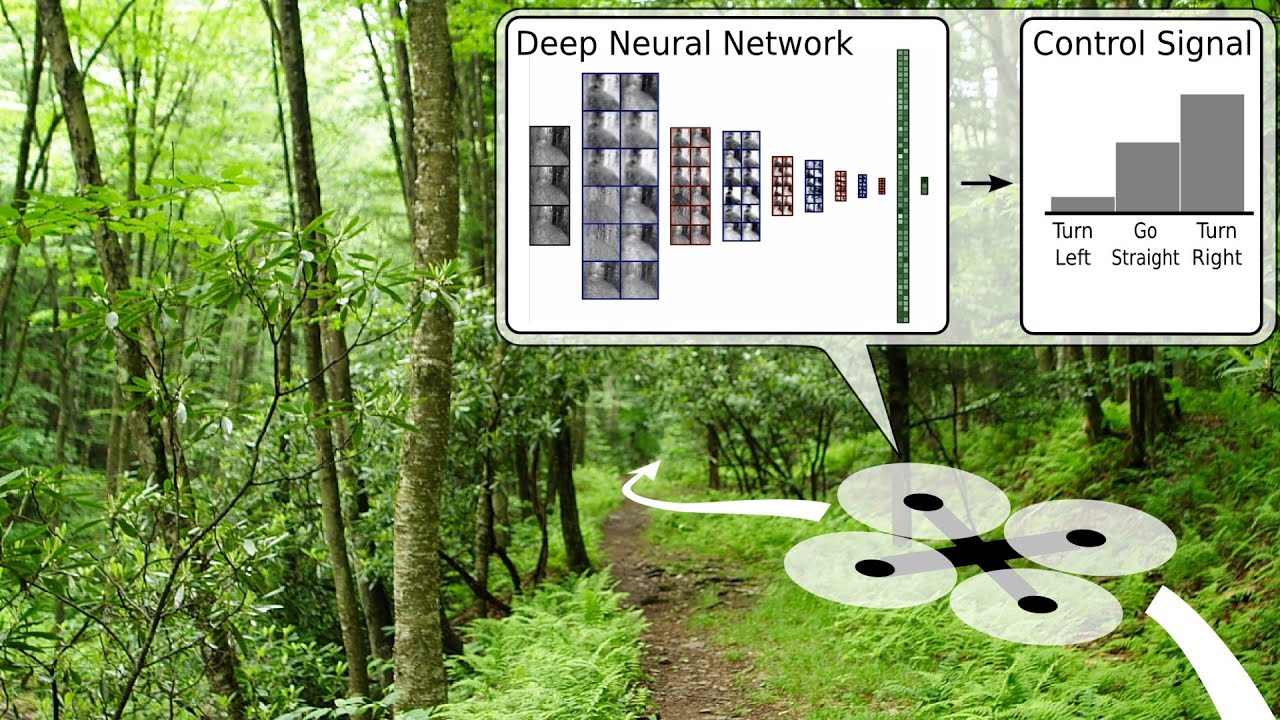

Drone performing imitation learning on the IDSIA dataset, using a ResNet for image classification. Code

Reconstruction of particle tracks using ML. Explored Random Forests, XGBoost, feedforward neural networks for pair classification, and Graph Neural Networks for edge classification. Code · Project Report

An open-source implementation of ChronoNet. Code · Project Report

Research project in unofficial collaboration with TCS Research. Applied state-of-the-art models for pneumonia detection to the RSNA Pneumonia Detection dataset. Tested Inception-v3 and DenseNet121, which achieved 83.8% and 77.9% classification accuracy respectively, and explored the applicability of Mask R-CNN to the dataset. Code